The "dictionary" definition of Data-Driven Quality (DDQ) is:

Application of data science techniques to a variety of data sources to assess and drive software quality.

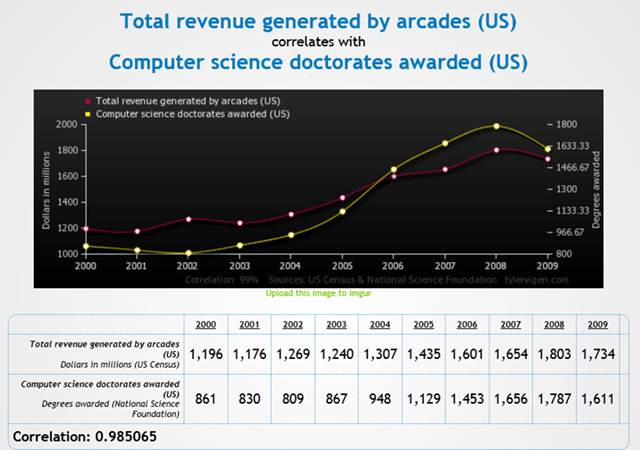

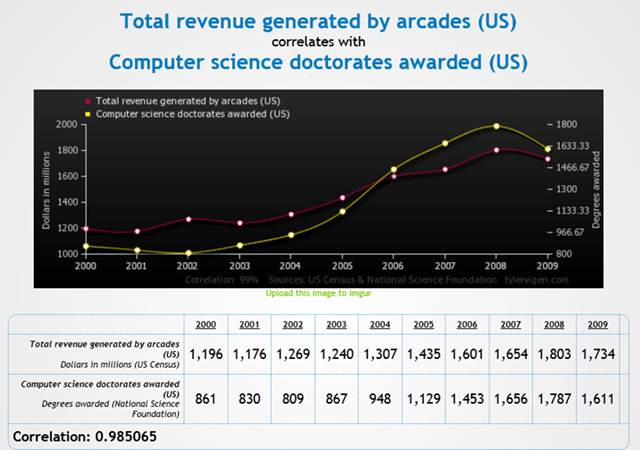

But it is really about questions and answers, specifically using data to find those answers. Trying to derive insights from data without knowing what you are looking for can be a source of new discoveries, but more often it will yield mirages instead of insights, such as the following:

[Source: tylervigen.com, used under CopyLeft license CC 4.0]

(if I only had such data in 1983 I could have wasted even more of my quarter-fueled youth)
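To make the "mirage" concrete, here is a minimal sketch of how two series that have nothing to do with each other can still look tightly linked. Everything in it is an assumption for illustration: the series names, the numbers, and the random seed are invented, and the data is not taken from the chart above. Both series simply trend upward over the same ten periods, which is enough to produce a high Pearson correlation.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: two unrelated quantities, each with its own upward
# trend and its own independent noise. The names are made up.
rng = random.Random(1983)
periods = range(10)
weekly_signups = [10 + 2 * t + rng.uniform(-1, 1) for t in periods]
office_coffee_orders = [500 + 30 * t + rng.uniform(-10, 10) for t in periods]

r = pearson(weekly_signups, office_coffee_orders)
print(f"correlation between unrelated series: r = {r:.2f}")
```

The correlation comes out very high even though neither series influences the other; the shared trend over time does all the work. Starting from a question ("did this feature increase signups?") rather than from the data is what keeps you from chasing this kind of result.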

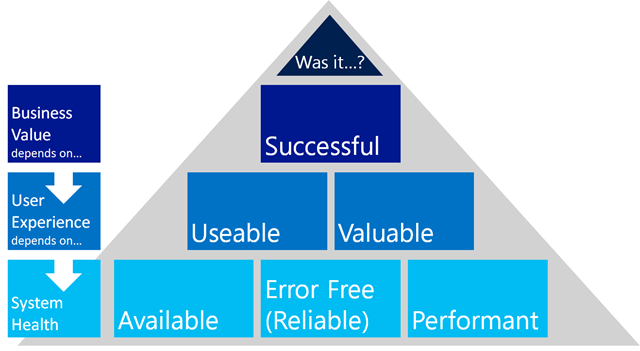

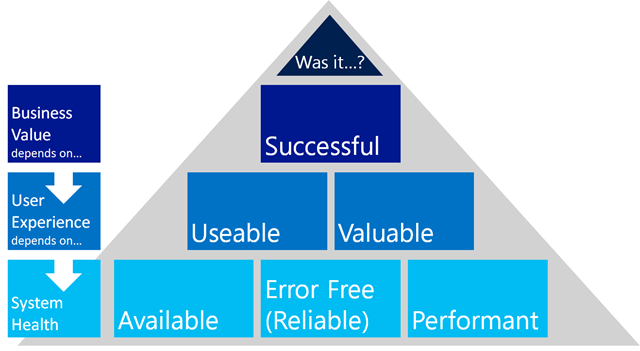

So what questions then? These are the questions to ask about your software:

In this diagram "it" is your software (service or product). The questions are divided into the three layers identified by the categories on the left:

- Business value: Does your software contribute to the bottom line and/or strategic goals?

- User experience: Does your software delight customers and beat the competition?

- System Health: Does your software work as designed?

Each category layer depends on the layer beneath it. Consider that it is difficult to build a good user experience on a slow, error-riddled product. And ultimately it is the top layer, Business value, that we care about. This leads to the "trick question" I will sometimes ask software testers and SDETs: What is your job? To which I answer:

Your job is to enable the creation of software that delivers value to the business. This is the job of the tester. It also happens to be the job of the developer, program manager, designer, manager, etc.

(I explore this idea a bit more here if you are interested) If you think about it, you might want to add to the above statement that you create this business value through:

- An experience that delights the users

- The production of high-quality software

True. It also turns out that these are respectively User Experience and System Health, the layers we are dependent on to build Business Value. An interesting note about that word "quality". "High-quality software" above means system health – that it does not break. But as Brent Jensen likes to ask, which is higher quality software:

- A. Perfect bug-less software that people do not use (or perhaps worse, they hate to use)

- B. Quirky software with a few glitches making millions happy (and making happy $millions)

If you believe the answer is B, then DDQ will appeal to you with its "Q" for "quality" happily spanning the pyramid above and not just system health. DDQ is about a confluence of what has been called Business Intelligence (BI) and quality. They are not really different things.

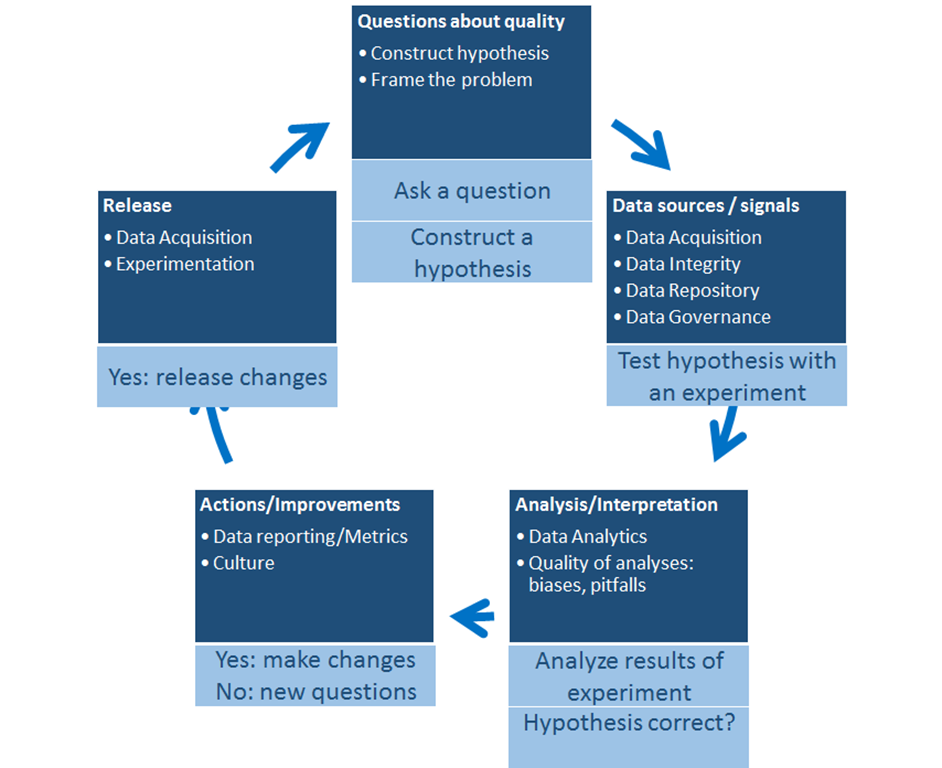

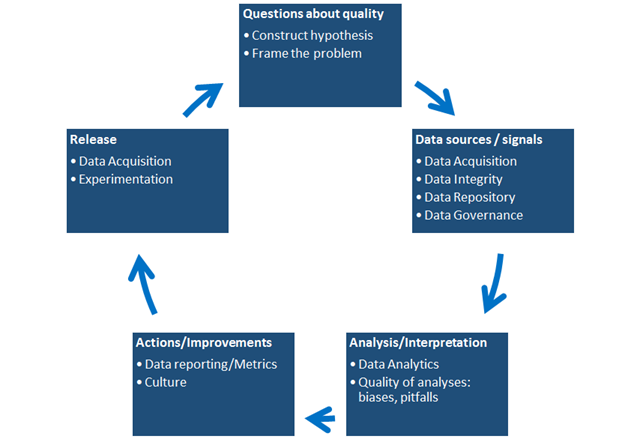

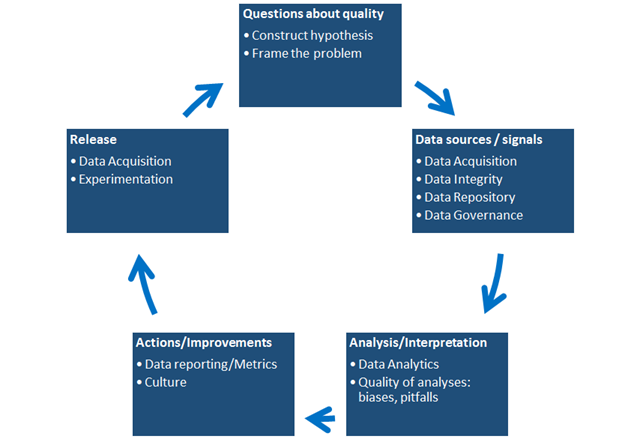

Asking the right questions is an important start, but it is only one piece of the DDQ puzzle. DDQ works in an environment of iterative improvement (same as Agile). The faster we can spin around these cycles, the faster we improve our software. This is the DDQ Model:

I will leave it as an exercise to the reader to map the above to the scientific method. (I may help you out and do this in a future blog post.)

Once we understand our questions, the next step is to understand the data sources we can use to answer them. I will close by sharing a list of some of the types of data we can use. You will note that much of this data comes from production or near-production (think of private betas or internal dogfood). Production is a great environment to get data from, as it is the most realistic environment for our software, with real users doing real user things.

| Business value | User experience | System health |
| --- | --- | --- |
| Is it successful? | Is it usable? valuable? | Is it available? reliable? performant? |
| Acquisition: adoption of a new feature, new users, unique users<br>Retention: market share, session duration, repeat use<br>Monetization: purchase, conversion, ad revenue; minus COGS, support costs | Usage data: feature use, task completion rates<br>Feedback (2nd person): user ratings, user reviews<br>Sentiment analysis (3rd person): from Twitter, forums | Infrastructure data: memory, CPU, network<br>Application data: errors, latency, MTTR (Mean Time to Recovery), compliance<br>Test cases (run as part of pre-production test passes, or in production as monitors): correctness, performance<br>Engineering metrics (pre-production): code coverage, code churn, delivery cadence |
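As a rough illustration of how raw data turns into an answer at each layer, here is a minimal sketch that computes one metric per column: a conversion rate (business value), a task completion rate (user experience), and MTTR (system health). The record shapes, field names, and sample values are all hypothetical; real telemetry will look different, and which definition of each metric is right depends on the question you are asking.

```python
from datetime import datetime, timedelta

# Hypothetical sample data, invented for illustration only.
sessions = [
    {"user": "u1", "started_checkout": True, "purchased": True},
    {"user": "u2", "started_checkout": True, "purchased": False},
    {"user": "u3", "started_checkout": False, "purchased": False},
    {"user": "u4", "started_checkout": True, "purchased": True},
]
# Each incident is a (detected, recovered) pair of timestamps.
incidents = [
    (datetime(2014, 11, 3, 9, 0), datetime(2014, 11, 3, 9, 20)),
    (datetime(2014, 11, 5, 14, 0), datetime(2014, 11, 5, 14, 40)),
]


def conversion_rate(sessions):
    """Business value: share of all sessions that ended in a purchase."""
    return sum(s["purchased"] for s in sessions) / len(sessions)


def task_completion_rate(sessions):
    """User experience: of the sessions that started checkout, the share that finished."""
    started = [s for s in sessions if s["started_checkout"]]
    return sum(s["purchased"] for s in started) / len(started)


def mean_time_to_recovery(incidents):
    """System health: average of (recovered - detected) across incidents."""
    total = sum((recovered - detected for detected, recovered in incidents), timedelta())
    return total / len(incidents)


print(f"conversion rate:      {conversion_rate(sessions):.0%}")
print(f"task completion rate: {task_completion_rate(sessions):.0%}")
print(f"MTTR:                 {mean_time_to_recovery(incidents)}")
```

Note that the first two metrics are computed from the same session records; only the denominator changes. That is the "BI and quality are not really different things" point in miniature.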

Not covered

As you can see in the DDQ model, there is plenty more to cover. Besides the other boxes in that model, here are some other things that were NOT covered in this blog post:

- How to determine specific scenarios to frame your questions for your software

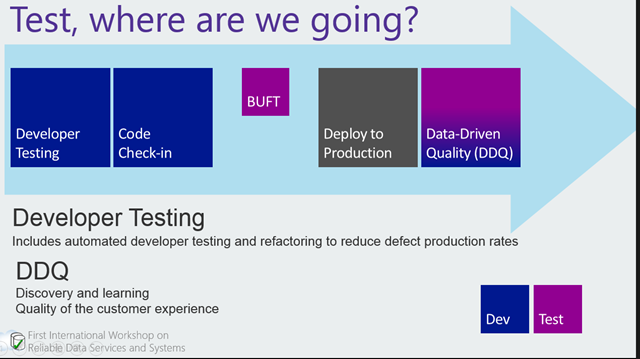

- How this fits into a comprehensive software development life cycle, and specifically the impact on BUFT (Big Up-Front Testing)

- Impact on team roles and responsibilities. Who does what?

- The future of the Tester/SDET role

- What do you need to know about actual Data Science?

- Tools

- Dashboards, and actionability

- Examples :-)

Further reading

If you want to learn more, I recommend the following:

- My former Microsoft colleague Steve Rowe has a great series of posts on DDQ

- Adding to the acronym soup, but definitely on target with DDQ, my often co-conspirator Ken Johnston explains EaaSy and MVQ.

- Although I had not quite tightened up the questions into the neat pyramid model above, I do fill in some of the blanks left by this blog post in my Roadmap to DDQ talk.

Finally, I wish to acknowledge and thank Monica Catunda, who collaborated with me on much of this material and co-delivered the Create Your Roadmap to Data-Driven Quality 1-day workshop at STPCon Nov 2014 in Denver.

