Lies, damn lies, and statistics – Your guide to misleading correlations.

We should trust data. We certainly should trust data more than we trust the HiPPO (the Highest Paid Person's Opinion). But diving head-first into the data in search of answers, when we ourselves do not yet know the questions, can be risky. After all, Goal, Question, Metric (GQM) was promoted, in that order, over 20 years ago (here is the original GQM paper).

I said “risky”, but I did not say “wrong”. As an advanced technique, the “data wallow” can surface insights we did not even know we were looking for. But it carries the risk that you will find a misleading correlation.

There are three types of misleading correlations:

1. Confounding factors

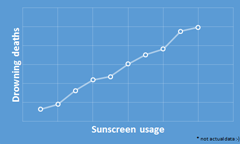

The first can be understood through the maxim “correlation does not necessarily imply causation”. For example, the following are correlated:

- Use of sunscreen

- Incidences of death due to drowning

Increased sunscreen usage is correlated with increased deaths by drowning. But of course sunscreen usage does not CAUSE drowning. Instead, both have a common cause: warm, sunny weather, which leads to both more sunscreen usage and more time spent swimming. Sunny weather is the confounding factor behind the sunscreen/drowning correlation.

2. Spurious correlation

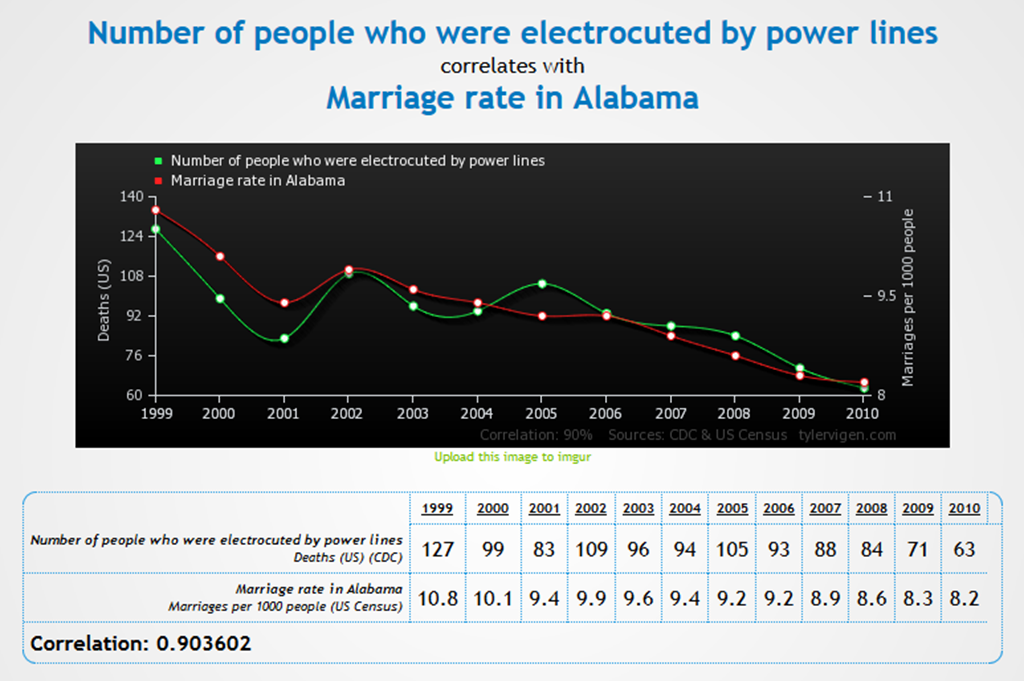

A debt of gratitude goes to Tyler Vigen for educating the world about these. Here is but one example (unlike the sunscreen chart above, this one IS actual data).

These are real statistical correlations, but they are meaningless in every practical sense: the two series are simply correlated by chance. Because the world records an enormous number of measurements, some pairs of them will always correlate by chance alone.

3. Type I errors (Alpha risk)

A Type I error is a false positive. When designing a controlled experiment (or trial) to establish causation, we choose a significance level for the experiment, called alpha. Suppose we use the common level of alpha = 5%. If data at least as extreme as what we observed would arise in only 4% (or 4.9%, or 4.99%) of cases where there is NO causation, we conclude that there IS causation and report that. That 4% is the p-value, and it is not the chance that our conclusion is wrong. What alpha controls is a long-run rate: across experiments where there truly is no causation, we will still wrongly conclude there is causation about 5% of the time. Each such wrong conclusion is a false positive, or Type I error. Lowering alpha makes false positives rarer and so gives us more confidence in a positive result. The technical name for this is “specificity” (specificity is 1 − alpha). The cost is that we will miss more real effects, which are false negatives, or Type II errors.
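A quick simulation makes the meaning of alpha concrete. This Python sketch runs thousands of hypothetical two-group experiments on random data. In one batch the treatment has no effect at all. In the other it shifts the mean by 0.5 standard deviations, an arbitrary choice. For simplicity it uses a two-sample z-test with a known standard deviation of 1, rather than the t-test a real analysis would more likely use. Group size, effect size, and seed are all assumptions.

```python
import random
from statistics import NormalDist, fmean

def z_test_p_value(a, b, sigma=1.0):
    """Two-sided p-value for a difference in means, assuming a known sigma."""
    se = sigma * (1 / len(a) + 1 / len(b)) ** 0.5
    z = (fmean(a) - fmean(b)) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

def simulate_p_values(true_effect, n_experiments=5_000, group_size=30, seed=1):
    """Run many hypothetical experiments and collect one p-value from each."""
    rng = random.Random(seed)
    p_values = []
    for _ in range(n_experiments):
        control = [rng.gauss(0, 1) for _ in range(group_size)]
        treated = [rng.gauss(true_effect, 1) for _ in range(group_size)]
        p_values.append(z_test_p_value(treated, control))
    return p_values

no_effect = simulate_p_values(true_effect=0.0)    # the null hypothesis is true
real_effect = simulate_p_values(true_effect=0.5)  # a real (hypothetical) effect exists

results = {}
for alpha in (0.05, 0.01):
    # Type I error rate: "significant" results when nothing is there.
    false_pos = sum(p < alpha for p in no_effect) / len(no_effect)
    # Type II error rate: real effects the test fails to flag.
    missed = sum(p >= alpha for p in real_effect) / len(real_effect)
    results[alpha] = (false_pos, missed)
    print(f"alpha={alpha}: false positives {false_pos:.3f}, missed effects {missed:.3f}")
```

In each case the false-positive rate should land close to alpha itself. Dropping alpha from 5% to 1% cuts false positives but raises the share of real effects the test misses, which is exactly the trade-off described above.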

Call for feedback

I am sure the stats pros and data folks out there can offer corrections or clarifications to the above. Please feel free to do so in the comments.